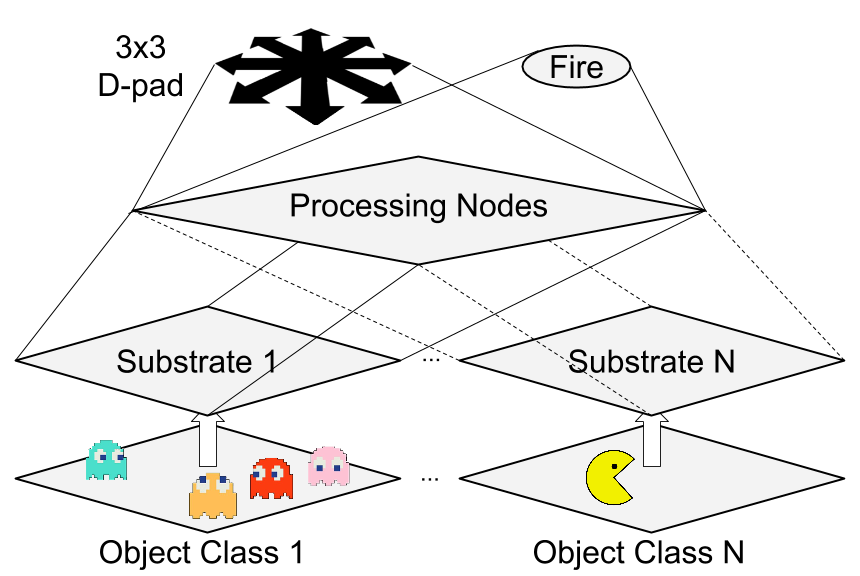

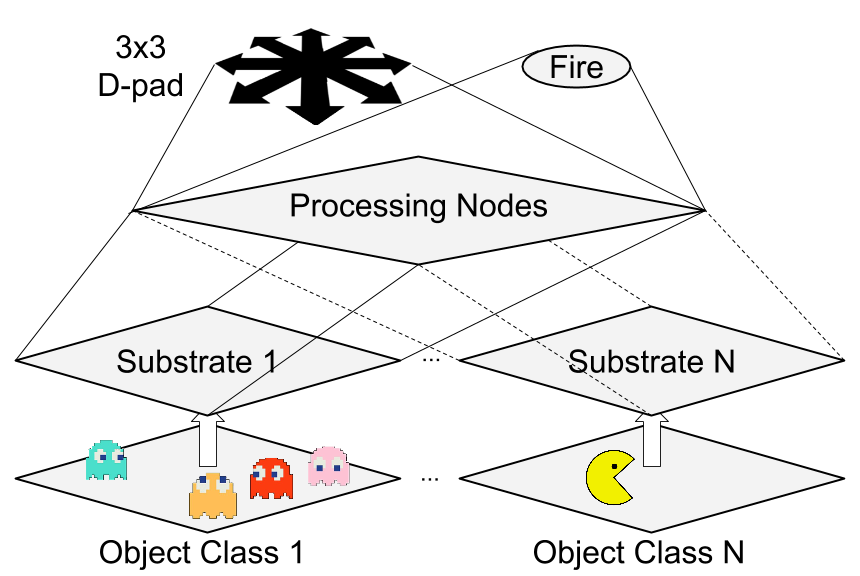

The network consists of a Substrate Layer, a Processing Layer, and an Output Layer. At each new frame, the Atari game screen is processed to detect the on-screen objects, which are then classified into categories (ghosts and Pac-Man in this example). There is one substrate for each object category. The (x, y) location of every object in a category on the current game screen activates the substrate node corresponding to that location. Substrate activation is shown by the white arrows. Activations propagate upward from the Substrate Layer to the Processing Layer and then to the Output Layer. An action is read from the Output Layer by first selecting the node with the highest activation on the directional (D-pad) substrate and then pairing it with the activation of the fire button. Pairing the joystick direction with the fire button produces actions in a manner isomorphic to the physical Atari controls.
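To make the input encoding and action readout concrete, here is a minimal Python sketch under stated assumptions. The substrate resolution (`SUBSTRATE_W`, `SUBSTRATE_H`), the 160×210 screen size, the dictionary format for detected objects, the 3×3 layout of the D-pad output substrate, the fire threshold of 0, and the action labels are all illustrative choices, not the paper's exact configuration. The helpers `build_substrates` and `decode_action` are hypothetical names, and propagation through the Processing Layer is omitted because its weights come from the evolved network.

```python
from typing import Dict, List, Tuple

# Assumed values for illustration: a coarse substrate over a 160x210 screen.
SUBSTRATE_W, SUBSTRATE_H = 8, 10
SCREEN_W, SCREEN_H = 160, 210

# Assumed 3x3 layout of the directional (D-pad) output substrate;
# the center node means "no direction".
DPAD = [["UPLEFT", "UP", "UPRIGHT"],
        ["LEFT", "NOOP", "RIGHT"],
        ["DOWNLEFT", "DOWN", "DOWNRIGHT"]]


def build_substrates(objects: Dict[str, List[Tuple[int, int]]],
                     categories: List[str]) -> Dict[str, List[List[float]]]:
    """Create one 2-D substrate per object category and set a node to 1.0
    wherever an object of that category appears on screen."""
    subs = {c: [[0.0] * SUBSTRATE_W for _ in range(SUBSTRATE_H)] for c in categories}
    for cat, positions in objects.items():
        for x, y in positions:
            # Map screen pixel coordinates onto the coarser substrate grid.
            col = min(x * SUBSTRATE_W // SCREEN_W, SUBSTRATE_W - 1)
            row = min(y * SUBSTRATE_H // SCREEN_H, SUBSTRATE_H - 1)
            subs[cat][row][col] = 1.0
    return subs


def decode_action(dpad_out: List[List[float]], fire_out: float,
                  threshold: float = 0.0) -> str:
    """Pick the most active D-pad node, then pair it with the fire button."""
    cells = [(r, c) for r in range(3) for c in range(3)]
    r, c = max(cells, key=lambda rc: dpad_out[rc[0]][rc[1]])
    direction = DPAD[r][c]
    if fire_out > threshold:
        # Center node plus fire is plain FIRE; otherwise append FIRE to the direction.
        return "FIRE" if direction == "NOOP" else direction + "FIRE"
    return direction


if __name__ == "__main__":
    subs = build_substrates({"pacman": [(80, 100)], "ghost": [(10, 20), (150, 200)]},
                            ["pacman", "ghost"])
    print(subs["pacman"][4][4])  # 1.0
    print(decode_action([[0.1, 0.9, 0.2], [0.0, 0.3, 0.0], [0.0, 0.0, 0.0]], 0.5))  # UPFIRE
```

Treating the direction and the fire button as two separate outputs keeps the output layer small (nine D-pad nodes plus one fire node) while still covering every joystick/button combination.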

Gameplay proceeds in this fashion until the episode terminates, either through a "Game Over" or by reaching the 50,000-frame cap. At the end of the game, the emulator reads the score from the console RAM, and this score is assigned to the agent as its fitness. A population of one hundred agents is maintained and evolved for 250 generations. At the end of each generation, crossover and mutation are performed to create the next generation. Emphasis is placed on (1) allowing the best agents in each generation to reproduce and (2) maintaining a diverse population of solutions. The videos below show the best (champion) agent playing the selected video game after 250 generations of evolution.
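The evaluate-select-reproduce cycle can be sketched as a generic generational loop. This is an illustrative evolutionary algorithm under stated assumptions, not the NEAT/HyperNEAT code: tournament selection plus a few unchanged elites stand in for whatever selection and diversity mechanisms the actual implementation uses (NEAT, for instance, protects diversity through speciation). The callables `step`, `read_score`, `random_genome`, `crossover`, and `mutate` are hypothetical; only the population size, generation count, and frame cap come from the text.

```python
import random
from typing import Callable, List, TypeVar

G = TypeVar("G")  # genome type (e.g. an evolved network); left abstract here

POP_SIZE = 100      # population size stated in the text
GENERATIONS = 250   # number of generations stated in the text
FRAME_CAP = 50_000  # per-episode frame cap stated in the text


def run_episode(step: Callable[[], bool], read_score: Callable[[], float],
                frame_cap: int = FRAME_CAP) -> float:
    """Play until game over or the frame cap, then return the score as fitness.

    `step` (hypothetical) advances the emulator one frame and returns True on
    game over; `read_score` (hypothetical) stands in for reading the RAM score.
    """
    for _ in range(frame_cap):
        if step():
            break
    return read_score()


def evolve(random_genome: Callable[[], G], fitness: Callable[[G], float],
           crossover: Callable[[G, G], G], mutate: Callable[[G], G],
           pop_size: int = POP_SIZE, generations: int = GENERATIONS,
           n_elite: int = 5, tournament_k: int = 3, seed: int = 0) -> G:
    """Generational loop: evaluate, keep elites, refill with mutated offspring."""
    rng = random.Random(seed)
    pop: List[G] = [random_genome() for _ in range(pop_size)]
    for _ in range(generations):
        fits = [fitness(g) for g in pop]
        order = sorted(range(pop_size), key=lambda i: fits[i], reverse=True)

        def pick() -> G:
            # Tournament selection: best of k random individuals. Fitter agents
            # reproduce more often, but weaker ones keep some chance (diversity).
            contenders = rng.sample(range(pop_size), tournament_k)
            return pop[max(contenders, key=lambda i: fits[i])]

        # Elitism: the best agents pass unchanged into the next generation.
        next_pop = [pop[i] for i in order[:n_elite]]
        while len(next_pop) < pop_size:
            next_pop.append(mutate(crossover(pick(), pick())))
        pop = next_pop
    # Return the champion of the final population.
    return max(pop, key=fitness)


if __name__ == "__main__":
    # Toy problem: a genome is one float and fitness peaks at 3.0.
    trng = random.Random(1)
    best = evolve(lambda: trng.uniform(-10, 10), lambda g: -(g - 3.0) ** 2,
                  lambda a, b: (a + b) / 2, lambda g: g + trng.gauss(0, 0.1))
    print(best)
```

In the Atari setting, `fitness` would wrap `run_episode` around an emulator driven by the agent's network; the toy problem only checks that the loop climbs toward the optimum.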

Evolved policies achieve state-of-the-art results, even surpassing human high scores on three games. More information about NEAT, HyperNEAT, CNE, and CMA-ES as well as alternate state representations can be found in the paper. Code is available at https://github.com/mhauskn/HyperNEAT.

A number of evolved players discovered interesting exploits or human-like play:

Infinite score loops were found in Gopher, Elevator Action, and Krull. Agents on these games obtained a finite score only because of the 50,000-frame cap on each episode. The score loop in Gopher, discovered by HyperNEAT, depends on quick reactions and would likely be very hard for a human to sustain for an extended period. Similarly, the loop in Elevator Action, discovered by CNE, requires a repeated sequence of timed jumps and ducks to dodge bullets and defeat enemies. The score loop in Krull, discovered by HyperNEAT, seems more likely to be a design flaw: the agent is awarded an extra life after completing a repeatable sequence of play. Most Atari games take the safer approach of awarding extra lives at (exponentially) increasing score thresholds.

The following Fixed-Topology-NEAT agents beat human high scores listed at jvgs.net: